The Rise of GPUs in the AI Universe

GPUs are empowering data scientists and machine learning engineers to train in minutes models that could otherwise take days or weeks.

In this blog, we will implement one of the most common classification algorithms in machine learning: decision trees.

We will first implement it in Python using the scikit-learn library, which requires experience with Python as a scripting language. Then we will implement it on the AI & Analytics Engine, which requires absolutely no programming experience. It is a drag-and-drop data science and machine learning platform for everyone from business users to data scientists who want to extract insights from data quickly and seamlessly.

Don’t believe what you just read? You can get started with the platform without paying a dime.

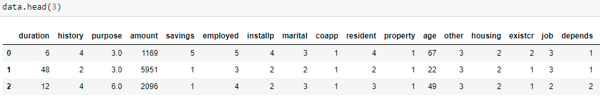

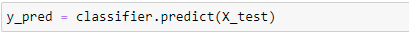

The dataset used contains a list of numeric features such as job, employment history, housing, marital status, purpose, savings, etc. to predict whether a customer will be able to repay a loan.

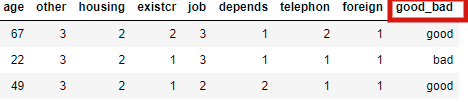

The target variable is ‘good_bad’. It consists of two categories: good and bad. Good means the customer will be able to repay the loan whereas bad means the customer will default.

It is a binary classification problem, meaning the target variable consists of only two classes.

This is a very common use case in banks and financial institutions as they have to decide whether to give a loan to a new customer based on how likely it is that the customer will be able to repay it.

A decision tree is a supervised machine learning algorithm that breaks a dataset down into smaller and smaller subsets while incrementally building the associated tree. The final result is a tree with decision nodes and leaf nodes.

A decision tree can handle both categorical and numerical data. Decision trees are the building units of more advanced machine learning algorithms such as boosted decision trees and, hence, serve as a baseline model for comparison for tree-based algorithms.

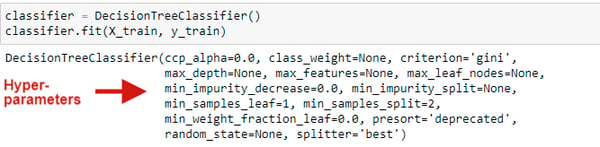

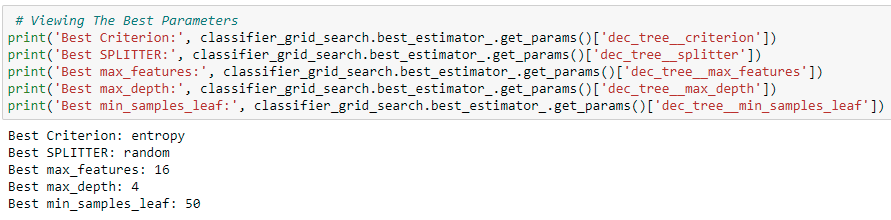

The performance of a machine learning model can be improved by tuning its hyperparameters. Hyperparameters are parameters that the user has to set in advance. They are not learned from the data during training.

Some of the most common hyperparameters for a decision tree are:

criterion: This parameter determines how the impurity of a split will be measured. Possibilities are ‘gini’ or ‘entropy’.

splitter: How the decision tree searches the features for a split. The default is ‘best’, meaning that at each node the algorithm evaluates the candidate features and chooses the best split. If it is set to ‘random’, the algorithm instead draws a random split point for each candidate feature and keeps the best of those random splits. The number of candidate features considered at each node is determined by the ‘max_features’ parameter.

max_depth: This determines how deep the tree can grow. The default is None, which lets the tree keep splitting until every leaf is pure or holds fewer than min_samples_split samples, and this often results in overfitting. Setting max_depth is one of the ways in which we can regularize the tree, that is, limit its growth to prevent overfitting.

min_samples_split: The minimum number of samples a node must contain to be considered for splitting. The default is 2. This is another parameter that regularizes the decision tree.

max_features: The number of features to consider when looking for the best split. By default, the decision tree will consider all available features to make the best split.
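As a rough sketch, the hyperparameters above map onto the constructor of scikit-learn's `DecisionTreeClassifier` as follows. The specific values are illustrative assumptions, not the settings tuned later in this post.

```python
from sklearn.tree import DecisionTreeClassifier

# Illustrative values only; scikit-learn's defaults are noted in the comments.
tree = DecisionTreeClassifier(
    criterion="entropy",      # impurity measure: "gini" (default) or "entropy"
    splitter="best",          # "best" (default) or "random"
    max_depth=5,              # default None: no limit on depth
    min_samples_split=10,     # default 2
    max_features=None,        # default None: consider all features
    random_state=42,          # fixed seed so repeated runs give the same tree
)

# Inspect the configuration before fitting.
print(tree.get_params())
```

Nothing is trained at this point; the object only stores the configuration until `fit` is called.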

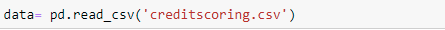

Let’s start by implementing a decision tree classifier on the dataset using Jupyter Notebook.

(You can view and download the complete Jupyter Notebook here. And the dataset 'creditscoring.csv' can be downloaded from here)

We start by importing all the libraries required to read the data, split it into training and test sets, build and evaluate the decision tree, optimize the hyperparameters using grid search, and plot the ROC and precision-recall curves.


Let’s view the first three rows of our dataset:

Let’s have a look at our target variable ‘good_bad’:
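A minimal sketch of these two steps with pandas is shown here. The rows are made up and stand in for 'creditscoring.csv' so that the snippet runs on its own; only the column names are taken from this post.

```python
import io

import pandas as pd

# Made-up rows standing in for 'creditscoring.csv' (an assumption for illustration).
# With the real file you would call pd.read_csv("creditscoring.csv") instead.
csv_text = """duration,amount,age,purpose,good_bad
12,1000,30,1,good
24,2500,45,2,bad
36,4000,28,1,good
18,1500,52,3,good
"""
df = pd.read_csv(io.StringIO(csv_text))

first_rows = df.head(3)                            # first three rows
target_classes = sorted(df["good_bad"].unique())   # distinct values of the target

print(first_rows)
print(target_classes)
```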

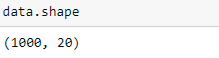

Our dataset consists of 1000 observations and 20 columns. Hence, we have 19 features and ‘good_bad’ is our target variable.

It’s always a good idea to explore your dataset in detail before building a model. Let’s see the data types of all our columns.
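The shape and column types can be inspected along these lines. This is a sketch on a tiny hypothetical frame, not the real dataset.

```python
import numpy as np
import pandas as pd

# Tiny hypothetical frame; the real dataset has 1000 rows and 20 columns.
df = pd.DataFrame({
    "duration": [12, 24, 36],
    "amount": [1000, 2500, 4000],
    "purpose": [1.0, np.nan, 2.0],        # a missing value makes this a float column
    "good_bad": ["good", "bad", "good"],  # text labels
})

n_rows, n_cols = df.shape   # (observations, columns)
print(n_rows, n_cols)
print(df.dtypes)            # one data type per column
```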


We have 18 columns of type integer, 1 column of type float, and 1 column (our target variable ‘good_bad’) of type object, meaning it is categorical.

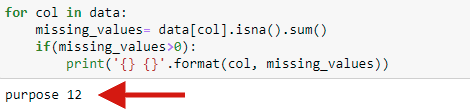

Always begin by identifying the missing values present in your dataset as they can hinder the performance of your model.

The only missing values we have are present in the ‘purpose’ column.
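One common way to count missing values per column is `isnull().sum()`. The frame here is a hypothetical stand-in in which only ‘purpose’ has gaps.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the credit dataset: only 'purpose' has gaps.
df = pd.DataFrame({
    "amount": [1000, 2500, 4000, 1500],
    "age": [30, 45, 28, 52],
    "purpose": [1.0, np.nan, 2.0, np.nan],
})

missing_per_column = df.isnull().sum()   # number of missing cells in each column
print(missing_per_column[missing_per_column > 0])
```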

When solving a classification problem, you should ensure that the classes in the target variable are balanced, i.e., that there is a roughly equal number of examples for every class. A dataset with imbalanced classes results in models that have poor predictive performance, especially for the minority class. In many models, the minority or extreme class is much more important from a business perspective, and hence this issue needs to be taken care of while preparing the data.

An example would help us illustrate our point better.

There are two customers A and B. A has a good credit history and if given a loan would be able to repay the loan. However, our model predicts A to be a ‘bad’ customer, and hence he is refused a loan. This does hurt the bank since they lost a customer who would have been able to repay the loan and the bank could have made a profit.

Now let’s consider customer B. B has a bad credit history and files an application for a loan. In reality, B would not be able to repay the loan. However, the model predicts B to be a ‘good’ customer, and hence his loan application is approved. This is much more damaging for the bank since they would not be able to recover the loan.

These two examples illustrate why having balanced classes can be so important from a business perspective.
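The two kinds of mistake made with customers A and B can be counted separately with a confusion matrix. The labels in this sketch are made up purely to illustrate how to read the matrix.

```python
from sklearn.metrics import confusion_matrix

# Made-up labels: what actually happened vs. what a model predicted.
y_true = ["good", "good", "bad", "bad", "good", "bad"]
y_pred = ["bad", "good", "good", "bad", "good", "bad"]

# Rows are the actual classes, columns the predicted classes, in the order given.
cm = confusion_matrix(y_true, y_pred, labels=["good", "bad"])

good_predicted_bad = cm[0, 1]   # customer A: a good customer is refused
bad_predicted_good = cm[1, 0]   # customer B: a defaulter is approved

print(cm)
print(good_predicted_bad, bad_predicted_good)
```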


Although the number of examples for good is slightly more than for bad, it is not a severely imbalanced dataset, and hence we can proceed with building our model. If, for example, we had 990 examples for good and only 10 examples for bad, our dataset would be highly skewed and we would need to balance the classes.
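A quick way to check the balance is to count the examples per class. The 7-to-3 split in this sketch is a hypothetical example and not the actual class distribution of the dataset.

```python
import pandas as pd

# Hypothetical target column with a 7-to-3 split between the two classes.
target = pd.Series(["good"] * 7 + ["bad"] * 3, name="good_bad")

class_counts = target.value_counts()                # absolute counts per class
class_share = target.value_counts(normalize=True)   # fraction of rows per class

print(class_counts)
print(class_share)
```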

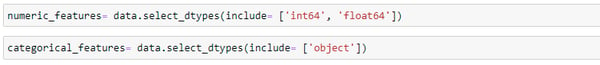

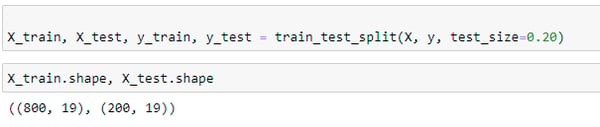

We begin by separating the features into numeric and categorical. The technique to impute missing values for numeric and categorical features is different.

For categorical features, the most frequent value occurring in the column is computed and the missing value is replaced with that.

For numeric features, a range of techniques exists, such as filling in the mean value or building a model on the known values and predicting the missing ones. The Iterative Imputer used below takes the second approach.
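Both strategies can be sketched with scikit-learn's `SimpleImputer` and `IterativeImputer`. The frame and the split into categorical and numeric columns are assumptions made for illustration, and `IterativeImputer` is still marked experimental in scikit-learn, which is why it needs the extra enabling import.

```python
import numpy as np
import pandas as pd
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (required before the next import)
from sklearn.impute import IterativeImputer, SimpleImputer

# Hypothetical frame with gaps in both kinds of column.
df = pd.DataFrame({
    "amount": [1000.0, 2000.0, np.nan, 4000.0, 5000.0, 6000.0],
    "duration": [10.0, 20.0, 30.0, 40.0, np.nan, 60.0],
    "purpose": [1.0, 1.0, np.nan, 2.0, 1.0, np.nan],   # category codes
})
categorical_cols = ["purpose"]
numeric_cols = ["amount", "duration"]

# Categorical: replace missing entries with the most frequent value in the column.
cat_imputer = SimpleImputer(strategy="most_frequent")
df[categorical_cols] = cat_imputer.fit_transform(df[categorical_cols])

# Numeric: model each column from the others and predict the missing entries.
num_imputer = IterativeImputer(random_state=0)
df[numeric_cols] = num_imputer.fit_transform(df[numeric_cols])

print(df.isnull().sum())
```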

The missing values have been imputed for the ‘purpose’ column. Now we have zero missing values in our dataset.


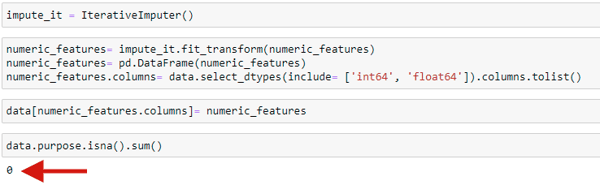

X has all our features whereas y has our target variable.

The decision tree model has been fit with the default hyperparameter values, since we have not specified any yet. We will do that in the next section.
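A minimal sketch of this step is shown here. It uses synthetic data from `make_classification` as a stand-in for the credit dataset, and the 70/30 split is an assumption rather than the split used in the notebook.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in: 1000 rows and 19 features, like the dataset in this post.
X, y = make_classification(
    n_samples=1000, n_features=19, n_informative=6, random_state=0
)

# Hold out 30% of the rows for testing, keeping the class ratio in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# No hyperparameters are set, so scikit-learn's defaults are used.
model = DecisionTreeClassifier(random_state=0)
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)   # share of correct test predictions
print(f"Test accuracy: {accuracy:.3f}")
```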

Decision trees are widely used to do feature selection. When you have many features and you do not know which one to include, a good way is to use decision trees to assess feature importance even though you might be using a completely different machine learning algorithm for your model.
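A fitted scikit-learn tree exposes these scores through its `feature_importances_` attribute. The sketch here ranks the features of a synthetic dataset, and the feature names are placeholders.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with placeholder feature names (assumptions for illustration).
feature_names = [f"feature_{i}" for i in range(8)]
X, y = make_classification(
    n_samples=500, n_features=8, n_informative=3, n_redundant=0, random_state=0
)

model = DecisionTreeClassifier(random_state=0).fit(X, y)

# One score per feature; the scores are normalized so that they sum to 1.
importances = pd.Series(model.feature_importances_, index=feature_names)
importances = importances.sort_values(ascending=False)
print(importances)
```

With matplotlib installed, calling `importances.plot.barh()` on the result would draw the ranking as a bar plot.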


From the plot, we can see that the most important feature in predicting whether a customer will repay a loan is the amount, followed by age, purpose, duration, and so on. The least important features, which contribute little to predicting the target variable, are foreign, depends, coapp, and resident.

To build a model with better predictive performance, we can remove the features that are not significant.
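Dropping the four least important columns named above could look like this with pandas. The rows are made up, and only the column names come from this post.

```python
import pandas as pd

# Made-up rows; only the column names are taken from this post.
df = pd.DataFrame({
    "amount": [1000, 2500, 4000],
    "age": [30, 45, 28],
    "duration": [12, 24, 36],
    "foreign": [1, 1, 2],
    "depends": [1, 2, 1],
    "coapp": [1, 1, 1],
    "resident": [2, 4, 3],
    "good_bad": ["good", "bad", "good"],
})

low_importance = ["foreign", "depends", "coapp", "resident"]
df_reduced = df.drop(columns=low_importance)   # returns a new frame without them

print(df_reduced.columns.tolist())
```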


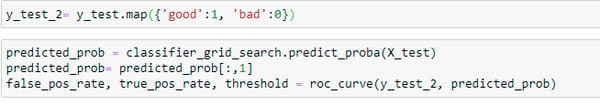


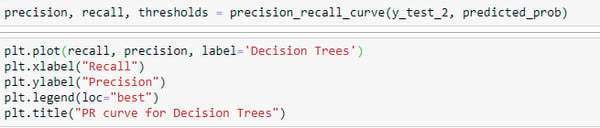


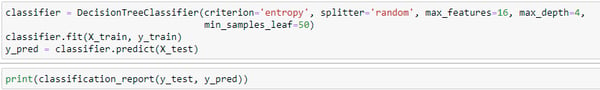


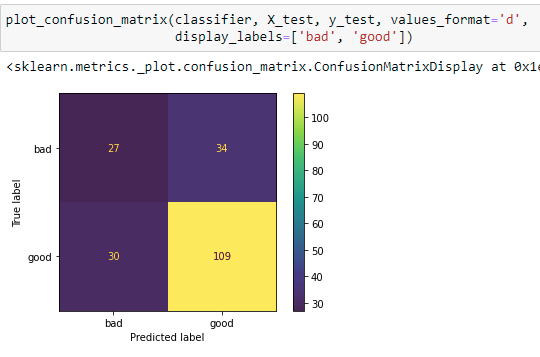

If you compare these new metrics with the old ones before the hyperparameters were tuned, you would see a significant improvement in precision and recall.
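The tuning step referred to here can be sketched as a grid search with scikit-learn's `GridSearchCV`. The data is synthetic, and the parameter grid, the 5-fold cross-validation and the F1 scoring are assumptions, not the exact settings from the notebook.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import classification_report
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the credit dataset.
X, y = make_classification(
    n_samples=600, n_features=10, n_informative=4, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Candidate values to try; every combination is evaluated with cross-validation.
param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [3, 5, 10, None],
    "min_samples_split": [2, 10, 20],
}
search = GridSearchCV(
    DecisionTreeClassifier(random_state=0), param_grid, cv=5, scoring="f1"
)
search.fit(X_train, y_train)

best_params = search.best_params_
# Precision, recall and F1 per class for the best tree, measured on the test set.
report = classification_report(y_test, search.predict(X_test))

print(best_params)
print(report)
```

After fitting, `search` behaves like the best tree it found, refit on the whole training set, so it can be used directly for prediction.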

BAM!

And we are done with the Python implementation. That was quite a bit of code that you had to write and understand. For someone who is new to the realm of data science and machine learning, it might feel like a lot of information to digest at first glance.

The fear of not knowing how to code should not stop you from implementing data science.

We have built an all-in-one platform that takes care of the entire machine learning lifecycle. From importing a dataset to cleaning and preprocessing it, from building models to visualizing the results and finally deploying them, you won’t have to write even a SINGLE line of code. The goal is to make machine learning and data science accessible to everyone!


The platform will automatically tell you which columns should be converted to categorical and which ones should be converted to numeric.

All you need to do is click on the + button right next to the suggestion, go to the recipe tab and click the commit button.


In Python, we had to write code and draw a bar plot to identify the most important features.

The AI & Analytics Engine uses AI to predict which columns are not significant in helping the model learn and suggests that the user remove these non-predictive columns.


Click on the commit button to implement the suggestions.


Click on the Finalize & End button to complete the data wrangling process.


The platform generates easy-to-understand charts and histograms for both the numeric and the categorical columns to help you better understand the data and its spread.


Click on the + button and then click on New App.


Give your application a name and since it’s a classification problem, select Prediction and choose the target variable from the dropdown menu.


Click on the + button as shown below and select Train New Model


Select Decision Tree Classifier from the list of algorithms. As you can see, the platform includes all the machine learning algorithms that you can use without writing even a single line of code.


You can either select the Default configuration or the Advanced Configuration where you can tune your model by optimizing the hyperparameters.


The platform lists the hyperparameters for the Decision Tree Classifier, which we also optimized using grid search in Python.


Next, click on train Model and the platform will start the process of training your Decision Tree Classifier.

Our model has now been trained. It took about 5 minutes since we selected the advanced configuration in order to optimize the hyperparameters.


It’s now time to see how well the model has learned from the data. For this, the platform has built-in capabilities to generate evaluation metrics such as the confusion matrix, classification report, ROC curve, and PR curve.


In this blog, we implemented the decision tree classifier first in Python using the scikit-learn library and then on the AI & Analytics Engine. With Python, you need knowledge of data cleaning, data visualization, and machine learning to implement the algorithm. In contrast, the AI & Analytics Engine does everything for you with the click of a few buttons. What can take hours in Python takes only a few minutes on the AI & Analytics Engine.

Not sure where to start with machine learning? Reach out to us with your business problem, and we’ll get in touch to explain how the Engine can help you specifically.
