AI & Analytics Engine Tutorial 2/4: Data Preparation

Here, you will learn how to leverage the AI & Analytics Engine for data preparation to be consumed by an ML model in a streamlined and repeatable way.

Data preparation can blow out project timelines and add a little grey to your hair along the way. It is no surprise, then, that Amazon Web Services (AWS) recently announced the release of their new data preparation tool, AWS Glue DataBrew. We wanted to provide a comparative look at this new option in the market and identify the similarities and differences with the AI & Analytics Engine’s Smart Data Preparation feature.

So if you are looking for an AutoML tool to help claw back time from data preparation or data wrangling, read on...

First, a little background on both options.

AWS’s new data preparation tool belongs to the category of no-code, easy-to-use visual data-preparation engines. It is primarily intended as a tool for cleaning, normalizing, and profiling data, as well as automating recipe jobs. It can be used as a standalone tool for data preparation, but it can also be integrated with AWS’ ecosystem of tools and services, such as S3 or other AWS data lakes and databases, for storage, import, and export of unprepared or prepared data.

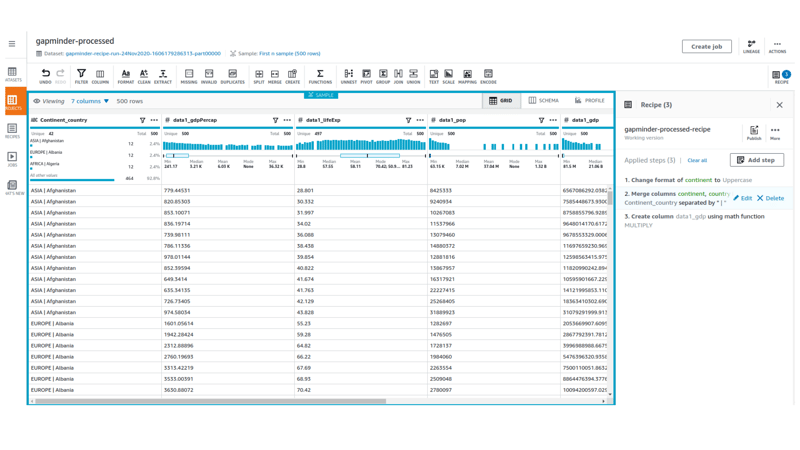

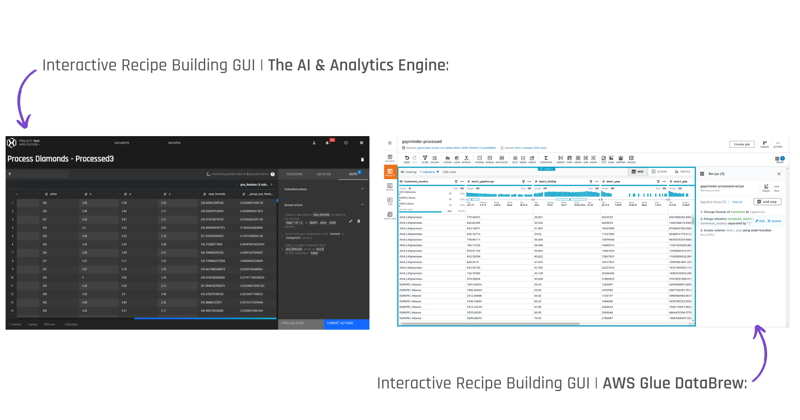

We decided to try out the platform to develop this article. Below is a screenshot of the AWS Glue DataBrew graphical user interface (GUI).

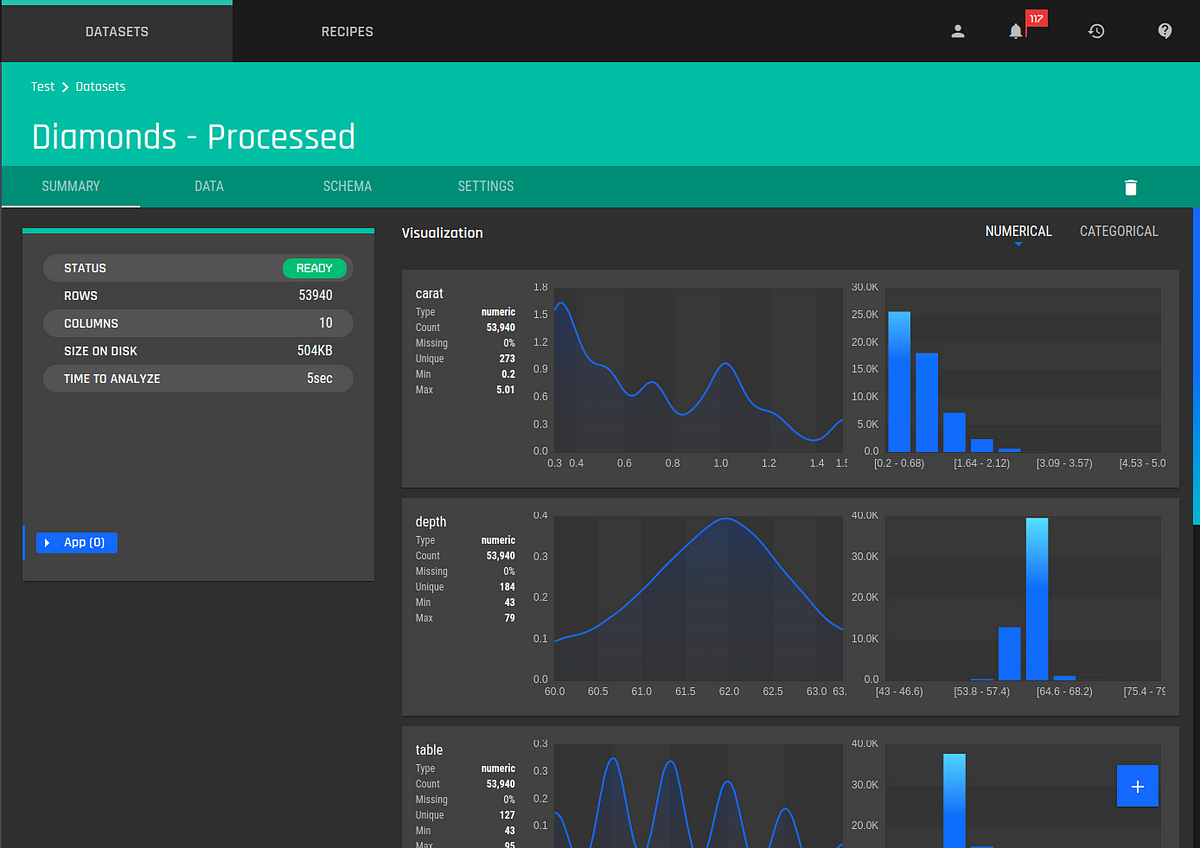

Within the AI & Analytics Engine, Smart Data Preparation is a fully interactive feature that lets users prepare their data at scale in a flexible manner.

The premise is that the user is guided by smart recommendations during the recipe-creation process. The feature offers a variety of “actions” (data transformation steps) through an action catalogue, giving users the ability to fully customize and edit their data-preparation recipes. It covers the four stages of data preparation commonly required in analytics and machine learning tasks:

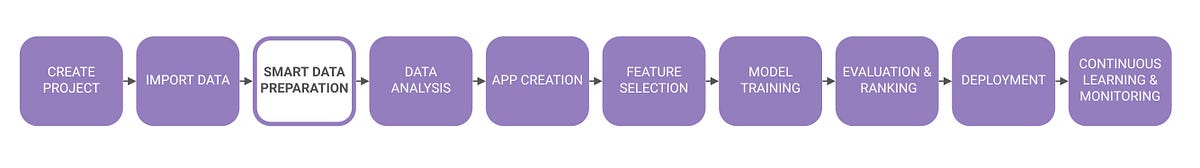

Rather than a standalone tool, the Smart Data Preparation feature is a tightly integrated functionality within the end-to-end user journey on the AI & Analytics Engine platform. It sits between the data import and app creation phases of the journey in a unified graphical user interface (GUI).

1. Import Data

2. Smart Data Preparation

3. Inspect the Statistical Profile of the Finalized Dataset

4. Create app (Target variable selection, train/test split)

5. Select features

6. Select models to train

7. Deploy trained models

Smart Data Preparation on the AI & Analytics Engine provides ease of use through the above seamless interface. In particular, there is:

The main similarity between the two tools is that they both cater to the need for an easy-to-use no-code interactive data preparation tool. Let's take a closer look:

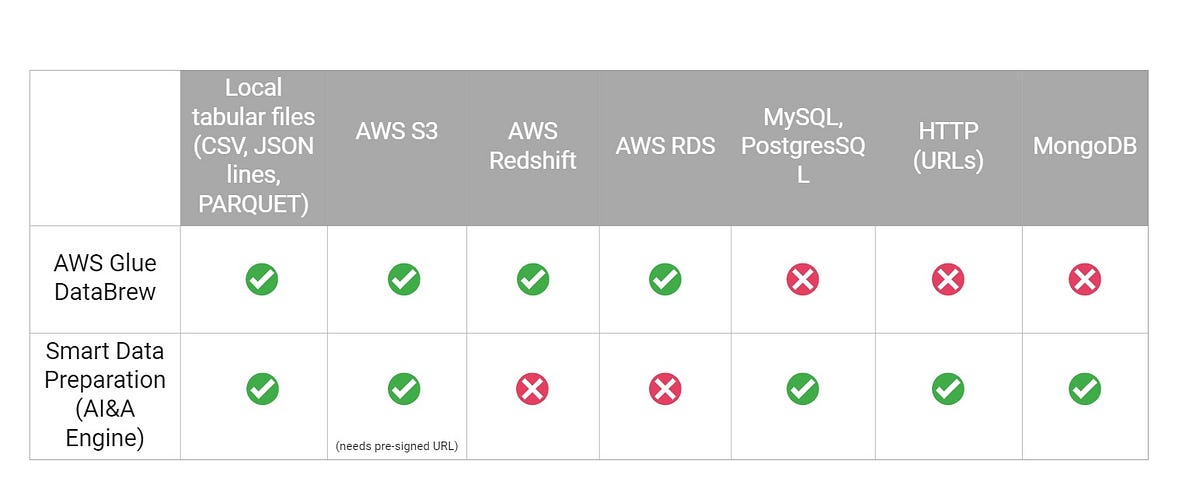

Both tools are targeted at tabular data files in the following formats: CSV, JSON (lines), and Parquet.

DataBrew allows the import of data mainly from data lakes on the AWS cloud, such as S3, Redshift, and RDS. Smart Data Preparation (AI & Analytics Engine) allows a diverse set of options for importing data, such as HTTP (URLs) and SQL and NoSQL databases. Users can still upload data from cloud storage services such as S3 or GCS (Google Cloud Storage) by getting a pre-signed URL for their dataset and using the HTTP option.

The outcome of the recipe-building process is a re-runnable recipe that can be re-used to transform a larger dataset of the same input schema.
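To illustrate what a re-runnable recipe means in practice, here is a minimal sketch in plain Python. It is not the API of either product: the `run_recipe` helper, the two actions, and the column names are all hypothetical. The point is only that a recipe is an ordered list of steps that can be built against a small dataset and later applied unchanged to a larger one with the same schema.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List

Row = Dict[str, Any]
Action = Callable[[Row], Row]


def strip_name(row: Row) -> Row:
    """Hypothetical action: trim whitespace from the 'name' column."""
    return {**row, "name": str(row["name"]).strip()}


def price_to_float(row: Row) -> Row:
    """Hypothetical action: cast the 'price' column from text to float."""
    return {**row, "price": float(row["price"])}


def run_recipe(recipe: List[Action], rows: Iterable[Row]) -> Iterator[Row]:
    """Apply every action, in order, to each row of the input."""
    for row in rows:
        for action in recipe:
            row = action(row)
        yield row


# The recipe is built once...
recipe = [strip_name, price_to_float]

# ...tried out on a small dataset...
sample = [{"name": " apple ", "price": "1.50"}]
sample_out = list(run_recipe(recipe, sample))

# ...and re-run as-is on a larger dataset with the same input schema.
larger = [{"name": "pear", "price": "2"}, {"name": " kiwi", "price": "0.75"}]
larger_out = list(run_recipe(recipe, larger))
```

Because the recipe only depends on the column names and types, not on the specific rows, the same list of actions works for any dataset that shares the input schema.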

Both AWS Glue DataBrew and the Smart Data Preparation feature have an interactive recipe-building user interface. There are many similarities between the two:

Whenever the list of actions in a recipe is modified, a validation check is applied to ensure that the recipe is legitimate. It consists of the following among many checks:

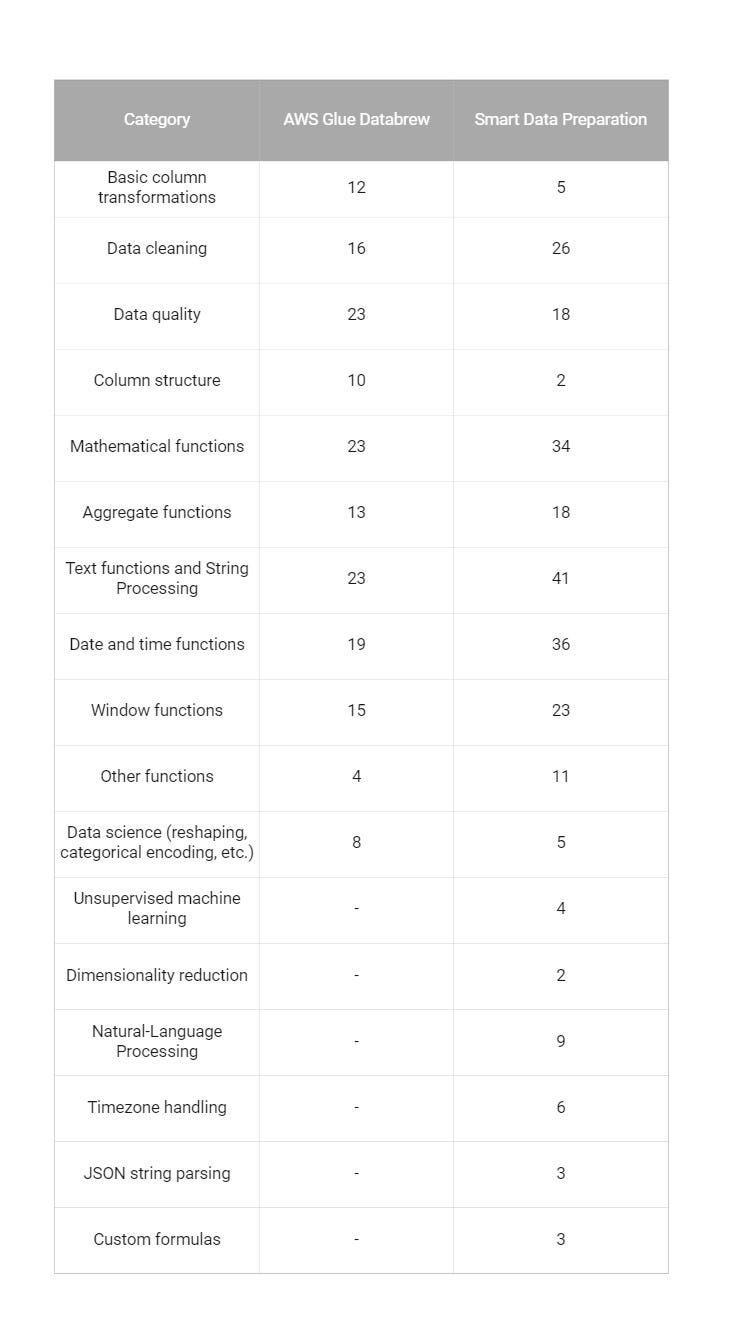

DataBrew’s official documentation of “Recipe actions reference” shows about 170 actions in their catalog. By comparison, we support 85 actions plus 81 formula functions in our Smart Data Preparation feature.

The coverage areas of the actions also differ between the two platforms, as shown in the table below:

Some actions such as “pivot”, “Extract PCA components” etc. result in a “column group” rather than a column. A column group serves as a placeholder in the schema wherein one or more component columns can be generated by a recipe action. This enables:

Our Smart Data Preparation feature lets users apply a transformation to multiple columns with a single action. To support this, our graphical user interface and API provide the following modes of including or excluding input columns, column groups, or both in the selection:

The user is also allowed to combine multiple such criteria with the and/or operator. This enables full flexibility to let the user specify complex selection criteria such as “columns matching the name pattern ‘x_.*’, excluding non-numeric columns.”
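The idea of combining selection criteria can be sketched as composable predicates. This is an illustrative sketch only, not the Engine's API: the helper names (`name_matches`, `has_type`, `all_of`, `any_of`), the type labels, and the example schema are assumptions made for this example.

```python
import re
from typing import Callable, Dict, List

Schema = Dict[str, str]                 # column name -> type label (assumed)
Selector = Callable[[str, str], bool]   # (name, dtype) -> selected?


def name_matches(pattern: str) -> Selector:
    """Select columns whose full name matches the regular expression."""
    regex = re.compile(pattern)
    return lambda name, dtype: regex.fullmatch(name) is not None


def has_type(*dtypes: str) -> Selector:
    """Select columns whose type label is one of the given labels."""
    return lambda name, dtype: dtype in dtypes


def all_of(*selectors: Selector) -> Selector:
    """Combine criteria with 'and'."""
    return lambda name, dtype: all(s(name, dtype) for s in selectors)


def any_of(*selectors: Selector) -> Selector:
    """Combine criteria with 'or'."""
    return lambda name, dtype: any(s(name, dtype) for s in selectors)


def select_columns(schema: Schema, selector: Selector) -> List[str]:
    """Return the names of all columns accepted by the selector."""
    return [name for name, dtype in schema.items() if selector(name, dtype)]


schema = {
    "x_age": "numeric",
    "x_city": "text",
    "y_total": "numeric",
    "x_score": "numeric",
}

# "Columns matching the name pattern 'x_.*', excluding non-numeric columns."
criteria = all_of(name_matches(r"x_.*"), has_type("numeric"))
selected = select_columns(schema, criteria)

# An 'or' combination: anything named 'y_.*' or of type text.
either = select_columns(schema, any_of(name_matches(r"y_.*"), has_type("text")))
```

With this schema, `selected` contains `x_age` and `x_score`: `x_city` matches the pattern but is dropped for being non-numeric, and `y_total` is numeric but does not match the pattern.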

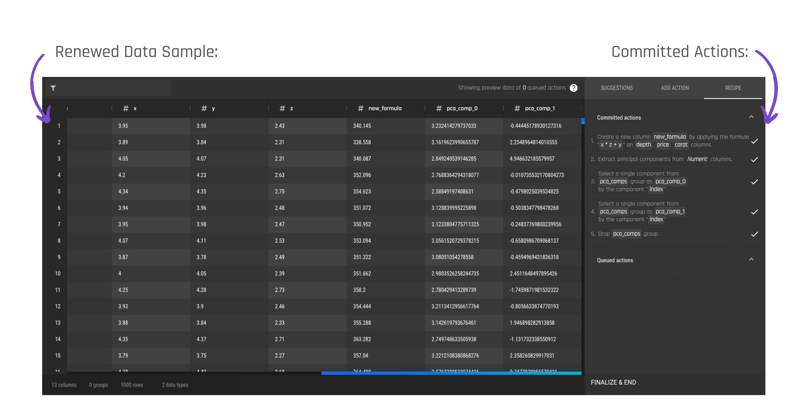

In the AI & Analytics Engine, whenever an action is added, it is “queued” to be committed to the recipe. As in DataBrew, the queued actions are run on a fixed-size sample (the first 5k rows) of the full dataset, and the result is displayed as a preview. This gives users visual feedback, allowing them to adjust the configuration of an action until the preview shows the result they want.
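A preview over a fixed-size sample can be sketched as follows. This is a hedged illustration of the general technique, not how either platform is implemented: the `preview` function, the generated dataset, and the queued action are hypothetical, and only the 5k-row sample size comes from the text above.

```python
from itertools import islice
from typing import Any, Callable, Dict, Iterable, Iterator, List

Row = Dict[str, Any]
Action = Callable[[Row], Row]

PREVIEW_ROWS = 5000  # "first 5k rows", as described in the text


def preview(rows: Iterable[Row], queued: List[Action],
            limit: int = PREVIEW_ROWS) -> List[Row]:
    """Run the queued actions on only the first `limit` rows."""
    out = []
    for row in islice(rows, limit):  # never reads past the sample
        for action in queued:
            row = action(row)
        out.append(row)
    return out


def full_dataset(n: int) -> Iterator[Row]:
    """Hypothetical stand-in for a large dataset, generated lazily."""
    for i in range(n):
        yield {"id": i, "value": i * 2}


# Hypothetical queued action: add a derived column.
queued = [lambda row: {**row, "value_plus_one": row["value"] + 1}]

result = preview(full_dataset(1_000_000), queued)
```

Because `islice` stops after the first 5,000 rows, the cost of the preview does not grow with the size of the full dataset, which is what makes interactive feedback practical.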

Our platform also provides an additional step, called “committing” the queued actions to a recipe, which the user can take before continuing to edit the recipe further. Committing actions signals the platform to run the recipe actions on the full dataset (rather than on the sample) and then show a renewed sample preview. This results in a more accurate data preview than one in which the sample is first drawn from the raw data and the recipe actions are applied afterwards.
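The difference between previewing queued actions on a sample and re-sampling after a commit can be shown with a small sketch. The function names, the filtering action, and the tiny sample size are assumptions chosen for illustration; how the Engine implements committing internally is not described here.

```python
from itertools import islice
from typing import Any, Callable, Dict, Iterable, Iterator, List

Row = Dict[str, Any]
# Here an action transforms a whole stream of rows, so it can drop rows.
Action = Callable[[Iterable[Row]], Iterator[Row]]

SAMPLE_SIZE = 5  # kept tiny for illustration; the text mentions 5k rows


def keep_even_ids(rows: Iterable[Row]) -> Iterator[Row]:
    """Hypothetical filtering action: keep rows whose id is even."""
    return (row for row in rows if row["id"] % 2 == 0)


def sample_then_apply(rows: Iterable[Row], actions: List[Action],
                      n: int = SAMPLE_SIZE) -> List[Row]:
    """Queued preview: take the raw sample first, then run the actions."""
    out: Iterable[Row] = islice(rows, n)
    for action in actions:
        out = action(out)
    return list(out)


def apply_then_sample(rows: Iterable[Row], actions: List[Action],
                      n: int = SAMPLE_SIZE) -> List[Row]:
    """After a commit: run the actions on all rows, then take the sample."""
    out: Iterable[Row] = iter(rows)
    for action in actions:
        out = action(out)
    return list(islice(out, n))


dataset = [{"id": i} for i in range(20)]

queued_preview = sample_then_apply(dataset, [keep_even_ids])
committed_preview = apply_then_sample(dataset, [keep_even_ids])
```

In this toy case the queued preview shrinks to three rows (ids 0, 2, 4) because the filter runs on a five-row raw sample, while the preview after committing still shows a full five rows (ids 0, 2, 4, 6, 8) of processed data.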

Committing actions to a recipe also allows the platform to run intelligent algorithms on the fully processed data, so that it can give users good recommendations for the next set of actions in their recipe.

In DataBrew, recommendations are generated on a per-column basis and are not available by default. The user needs to click on a particular column and request recommendations for that column.

The AI & Analytics Engine’s Smart Data Preparation also provides recommendations of the next set of actions likely to be helpful to the user.

The key differences are that:

The pricing structures of the two options are very different. Keep in mind that you purchase DataBrew as a single tool and the AI & Analytics Engine as an end-to-end toolchain.

AWS Glue DataBrew: Pricing is calculated at an hourly rate, billed per second, with additional costs based on tasks and region. The cost depends heavily on how you use the platform, so it is best to check the details here: https://aws.amazon.com/glue/pricing/. You can also use their calculator.

"For the AWS Glue Data Catalog, you pay a simple monthly fee for storing and accessing the metadata. The first million objects stored are free, and the first million accesses are free. If you provision a development endpoint to interactively develop your ETL code, you pay an hourly rate, billed per second."

(https://aws.amazon.com/glue/pricing/)

The AI & Analytics Engine: There are four subscription tiers, catering to individual data users all the way through to enterprises. A 2-week free trial of the AI & Analytics Engine is currently available. For more information, you can check out PI.EXCHANGE’s AI & Analytics Engine pricing. Prices start from USD $129 per month.

If you are after a tool to speed up the data preparation stage of the data science process, both options will help. However, there are differences to consider that may mean one option fits your needs better than the other. The key differences are:

Not sure where to start with machine learning? Reach out to us with your business problem, and we’ll get in touch with how the Engine can help you specifically.
