How to Affordably Upscale Your Small Business with Machine Learning

How do you affordably upscale your current product offering with ML as a small business? There are a few basic problems a startup will encounter.

Explore one of the most common and powerful classification algorithms in machine learning: gradient boosted decision trees.

We will implement XGBoost, which has been making waves in data science and has become a go-to algorithm for many data scientists doing predictive modelling. XGBoost stands for extreme gradient boosting.

In one of our earlier blogs, we implemented decision trees both with and without code, using the same dataset that is used in this blog. Decision trees form the basis for more advanced machine learning algorithms such as gradient boosted trees. We recommend reading that article first if you are not completely sure what decision trees are and how they work. Or, to get started with no-code machine learning, check out this article on Getting Started with Data Science with no Coding.

We will first implement XGBoost in Python using the scikit-learn library. Then, we will implement it on the AI & Analytics Engine, which requires absolutely no programming experience.

The dataset contains numeric features such as job, employment history, housing, marital status, purpose, and savings, which are used to predict whether a customer will be able to pay back a loan.

The target variable is ‘good_bad’. It consists of two categories: good and bad. Good means the customer will be able to repay the loan, whereas bad means the customer will default. It is a binary classification problem, meaning the target variable consists of only two classes.

This is a very common use case in banks and financial institutions as they have to decide whether to give a loan to a new customer based on how likely it is that the customer will be able to repay it.

Boosting in machine learning is an ensemble technique (ensemble means a group of items or things viewed as a whole rather than individually) that refers to a family of algorithms that convert weak learners to strong learners.

The main principle of boosting is to fit a sequence of weak learners to weighted versions of the data. More weight is given to examples that were misclassified in earlier rounds, i.e., the weighting coefficients are increased for misclassified data and decreased for correctly classified data.

In gradient boosting, each new model is fit to the residuals (the errors) of the models built so far. We keep adding new models sequentially until no further improvement is seen. The predictions are then combined: through a weighted majority vote in reweighting schemes such as AdaBoost, or by summing the scaled outputs of all the trees in gradient boosting.

In XGBoost, the ensembles are constructed from individual decision trees. Hence, multiple trees are trained sequentially and each new tree learns from the mistakes of its predecessor and tries to rectify them.
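To make the idea of "learning from the mistakes of the predecessor" concrete, here is a minimal sketch of boosting on residuals in plain Python. It is a toy illustration for regression with squared loss, not how XGBoost is implemented internally; the data, the one-split "stump" learner, and all function names are made up for this example.

```python
# Toy gradient boosting for regression with squared loss, in plain Python.
# Each weak learner is a one-split decision stump fit to the current residuals.

def fit_stump(xs, residuals):
    """Return (threshold, left_value, right_value) with the lowest squared error."""
    best = None
    # Try every distinct x except the largest, so both sides are non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

def mean_squared_error(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)

def boost(xs, ys, n_rounds=20, learning_rate=0.3):
    base = sum(ys) / len(ys)            # start from the mean prediction
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # The residuals are the mistakes of the ensemble built so far.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add a shrunken version of the new stump to the ensemble.
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, stumps, predictions

# Made-up one-dimensional data.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]

base, stumps, final_predictions = boost(xs, ys)
initial_error = mean_squared_error(ys, [base] * len(ys))
final_error = mean_squared_error(ys, final_predictions)
print(f"MSE before boosting: {initial_error:.3f}, after: {final_error:.3f}")
```

Each round only has to correct what the previous rounds got wrong, which is why many shallow trees can add up to a strong model.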

XGBoost has a lot of hyperparameters to fine-tune, so it is not always easy to optimize a model built with it. There is no definitive answer as to which hyperparameters you should tune or which values will give the best results.

Some of the most common hyperparameters for XGBoost are:

max_depth: This determines how deep each tree can grow. The deeper the trees, the more likely the model is to overfit.

learning_rate: Also called shrinkage or eta, learning_rate scales down the contribution of each new tree, slowing the learning of the gradient boosted trees to help prevent overfitting.

gamma: The minimum loss reduction required to make a split. If splitting a node does not reduce the loss by at least gamma, that split won’t happen.

lambda: An L2 penalty factor that constrains the leaf weights, just like in ridge regression (exposed as reg_lambda in XGBoost's scikit-learn-style interface).

scale_pos_weight: This comes into play when there is a class imbalance in the target variable. The default is 1; if the classes are severely skewed, a common choice is the ratio of negative to positive examples.
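As a rough sketch of how these settings fit together, the snippet below collects them in a dictionary and derives scale_pos_weight from the class counts. The label counts and the specific values are invented for illustration and are not tuned recommendations; the key names follow XGBoost's scikit-learn-style interface.

```python
from collections import Counter

# Hypothetical encoded labels: 1 = positive class, 0 = negative class.
labels = [0] * 900 + [1] * 100
counts = Counter(labels)

# Common heuristic: number of negative examples / number of positive examples.
scale_pos_weight = counts[0] / counts[1]

# Illustrative values only; good settings depend on the dataset.
params = {
    "max_depth": 3,           # shallower trees are less prone to overfitting
    "learning_rate": 0.1,     # shrinkage applied to each new tree
    "gamma": 0.0,             # minimum loss reduction needed to split
    "reg_lambda": 1.0,        # L2 penalty on leaf weights
    "scale_pos_weight": scale_pos_weight,
}
print(params)
```

With the xgboost package installed, a dictionary like this could be passed on as keyword arguments, for example `XGBClassifier(**params)`.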

(You can view and download the complete Jupyter Notebook here. And the dataset 'creditscoring.csv' can be downloaded from here)

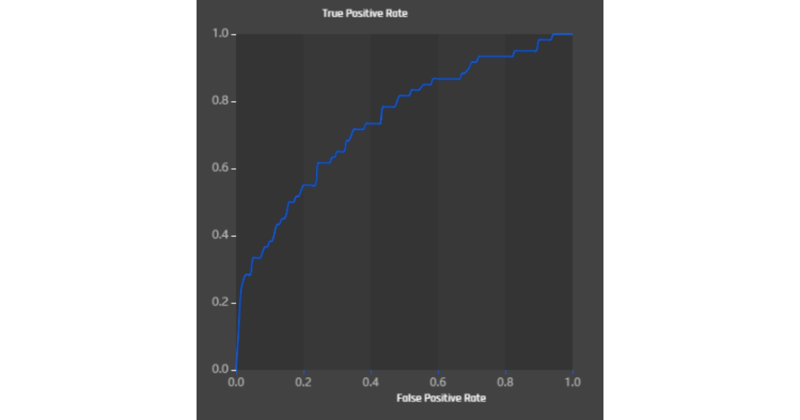

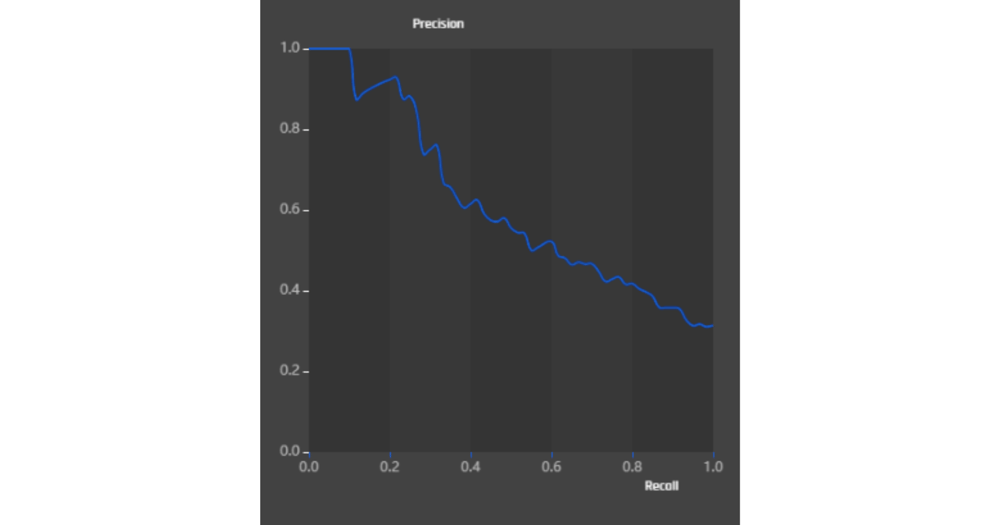

We start by importing the libraries required to read the data, split it into train and test sets, build and evaluate the model, optimize the hyperparameters using grid search, and plot the ROC and precision-recall curves.

Let’s view the first three rows of our dataset:

Let’s have a look at our target variable, ‘good_bad’.

Our dataset consists of 1000 observations and 20 columns. Hence, we have 19 features and ‘good_bad’ is our target variable.
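The inspection steps above might look roughly like this in pandas. Since the real file is not bundled here, the sketch reads a tiny inline stand-in; the `duration` and `amount` columns and all values are hypothetical, and only `purpose` and `good_bad` are names taken from the article.

```python
import io

import pandas as pd

# Tiny inline stand-in for the real file (hypothetical rows and columns).
toy_csv = io.StringIO(
    "duration,amount,purpose,good_bad\n"
    "6,1169,3,good\n"
    "48,5951,,bad\n"
    "12,2096,6,good\n"
    "42,7882,2,good\n"
    "24,4870,,bad\n"
)

# With the real dataset this would be: pd.read_csv("creditscoring.csv")
df = pd.read_csv(toy_csv)

print(df.head(3))                       # first three rows
print(df["good_bad"].value_counts())    # how many good vs. bad customers
print(df.shape)                         # (rows, columns)
```

On the real dataset, `df.shape` should report the 1000 rows and 20 columns mentioned above.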

It’s always a good idea to explore your dataset in detail before building a model. Let’s see the data types of all our columns.

We have 18 columns of type integer, 1 column of type float, and 1 column (our target variable ‘good_bad’) of type object, meaning it is categorical.

Always begin by identifying the missing values present in your dataset as they can hinder the performance of your model.

The only missing values we have are present in the ‘purpose’ column.
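A sketch of both checks, data types and missing values, on a small made-up frame (the `duration` and `amount` columns and all values are hypothetical):

```python
import numpy as np
import pandas as pd

# Small made-up frame; 'purpose' contains missing values.
df = pd.DataFrame({
    "duration": [6, 48, 12, 42, 24],
    "amount": [1169, 5951, 2096, 7882, 4870],
    "purpose": [3.0, np.nan, 6.0, 2.0, np.nan],
    "good_bad": ["good", "bad", "good", "good", "bad"],
})

print(df.dtypes)                        # data type of every column

missing_per_column = df.isnull().sum()  # count of missing values per column
print(missing_per_column)
```

Columns with a non-zero count in `missing_per_column` are the ones that need imputing before modelling.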

When solving a classification problem, you should check that the classes in the target variable are balanced, i.e., that there is a roughly equal number of examples for every class. A dataset with imbalanced classes tends to produce models with poor predictive performance, especially for the minority class.

Although there are slightly more examples of good than bad, the dataset is not severely imbalanced, so we can proceed with building our model. If, for example, we had 990 examples of good and only 10 examples of bad, our dataset would be highly skewed and we should balance the classes.
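One quick way to quantify the balance is to look at class shares rather than raw counts. The counts below are invented for illustration and are not the real distribution of the dataset.

```python
import pandas as pd

# Invented class counts, for illustration only.
y = pd.Series(["good"] * 600 + ["bad"] * 400, name="good_bad")

class_share = y.value_counts(normalize=True)   # fraction of rows per class
imbalance_ratio = y.value_counts().max() / y.value_counts().min()

print(class_share)
print(f"majority/minority ratio: {imbalance_ratio:.2f}")
```

A ratio close to 1 indicates balanced classes; the 990 versus 10 case described above would give a ratio of 99.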

We begin by separating the features into numeric and categorical. The technique to impute missing values for numeric and categorical features is different.

For categorical features, the most frequent value occurring in the column is computed and the missing value is replaced with that.

For numeric features, there is a range of techniques, such as replacing missing values with the column mean, or building a model from the known values and predicting the missing ones. The IterativeImputer from scikit-learn, used here, takes the latter approach.
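The two imputation strategies can be sketched with scikit-learn as follows. The frame, its column names, and its values are hypothetical; note that `IterativeImputer` is still marked experimental in scikit-learn and needs the explicit enabling import shown.

```python
import numpy as np
import pandas as pd
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables IterativeImputer)
from sklearn.impute import IterativeImputer, SimpleImputer

# Hypothetical frame with gaps in both numeric and categorical columns.
df = pd.DataFrame({
    "duration": [6.0, 48.0, 12.0, np.nan, 24.0, 36.0],
    "amount": [1200.0, 6000.0, 2100.0, 7900.0, 4900.0, np.nan],
    "housing": ["own", "rent", np.nan, "own", "own", "rent"],
})
numeric_cols = ["duration", "amount"]
categorical_cols = ["housing"]

# Categorical: replace missing entries with the most frequent value.
cat_imputer = SimpleImputer(strategy="most_frequent")
df[categorical_cols] = cat_imputer.fit_transform(df[categorical_cols])

# Numeric: model each column from the others and predict the gaps.
num_imputer = IterativeImputer(random_state=0)
df[numeric_cols] = num_imputer.fit_transform(df[numeric_cols])

print(df)
print(df.isnull().sum())
```

In a real project the imputers would be fit on the training split only and then applied to the test split, to avoid leaking information.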

The missing values have been imputed for the ‘purpose’ column. Now we have zero missing values in our dataset.

X has all our features whereas y has our target variable.

Note: We have converted our target variable, which was categorical, into numeric since XGBoost requires all inputs to be numeric. This is called label encoding.
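A minimal sketch of separating features from the target, label encoding, and splitting, using scikit-learn on a made-up frame. The feature columns, the values, and the 75/25 split are assumptions for illustration.

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder

# Made-up rows; only the 'good_bad' column name comes from the article.
df = pd.DataFrame({
    "duration": [6, 48, 12, 42, 24, 36, 18, 30],
    "amount": [1169, 5951, 2096, 7882, 4870, 9055, 2835, 6948],
    "good_bad": ["good", "bad", "good", "good", "bad", "good", "bad", "good"],
})

X = df.drop(columns="good_bad")   # all features
encoder = LabelEncoder()
# Classes are sorted alphabetically, so 'bad' -> 0 and 'good' -> 1.
y = encoder.fit_transform(df["good_bad"])

# Hold out a quarter of the rows, keeping class proportions similar.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

print(dict(zip(encoder.classes_, encoder.transform(encoder.classes_))))
print(X_train.shape, X_test.shape)
```

`encoder.inverse_transform` maps predictions back to the original good/bad labels when reporting results.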

The XGBoost model has been fit using the default values for the hyperparameters, since we have not specified any yet. We will do that in the next section.

Just like decision trees, XGBoost is a very powerful technique for feature selection. When you have many features and do not know which ones to include, a good approach is to use XGBoost to assess feature importance.

To build a model with better predictive performance, we can remove the features that are not significant.

(Comparison: You might want to compare the feature importance returned by the XGBoost model with the feature importance returned by the decision tree model here.)

And we are done with the Python implementation. Now let’s do the same on the AI & Analytics Engine.

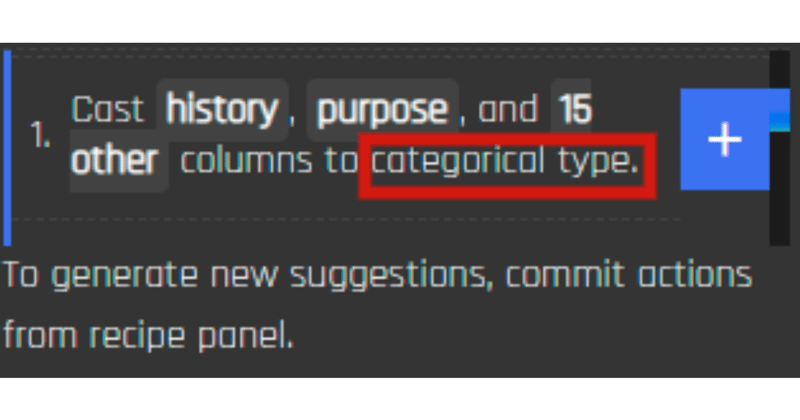

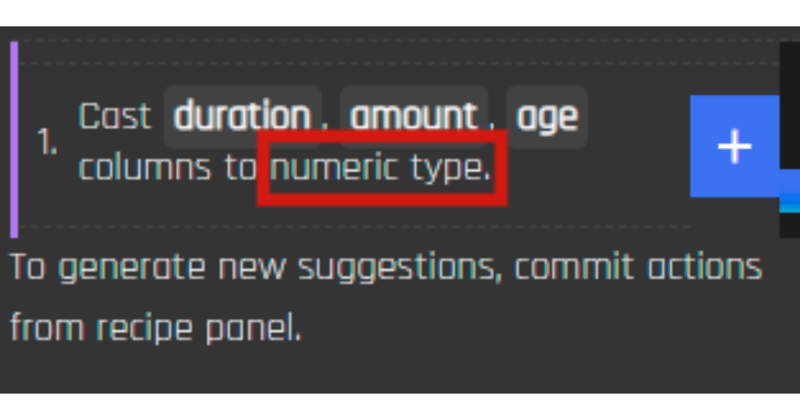

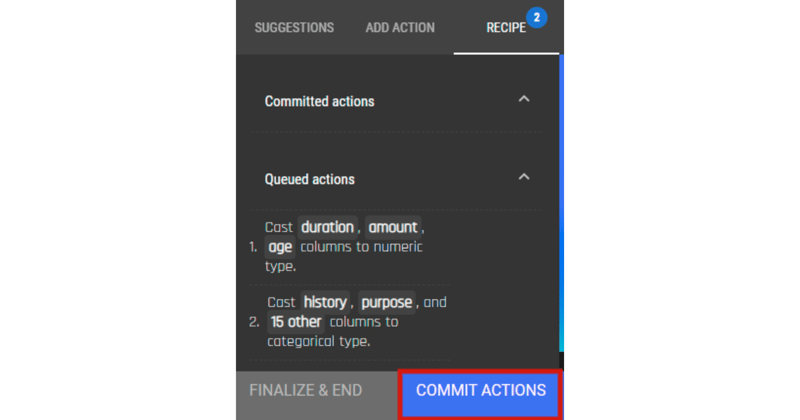

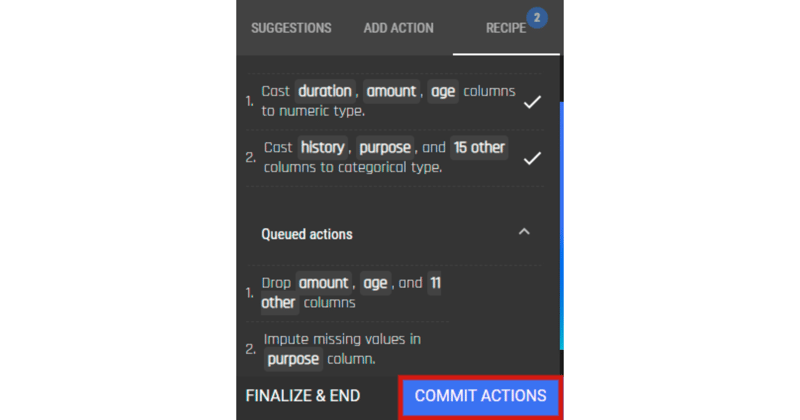

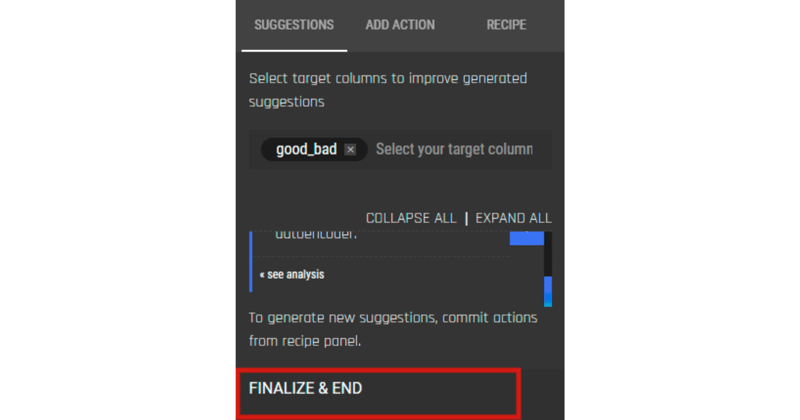

The platform will automatically tell you which columns should be converted to categorical and which ones should be converted to numeric.

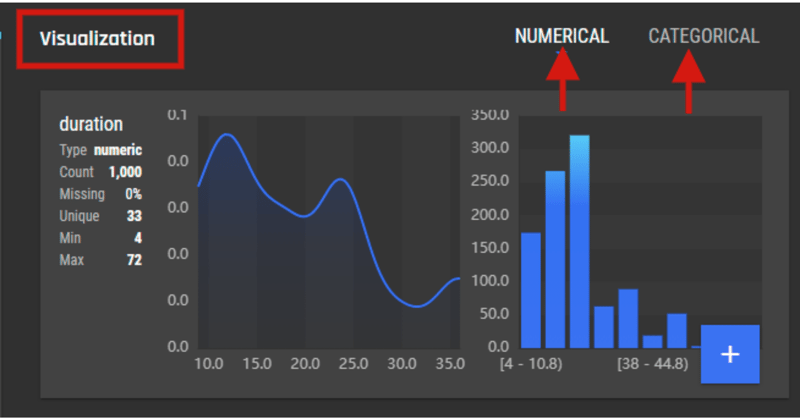

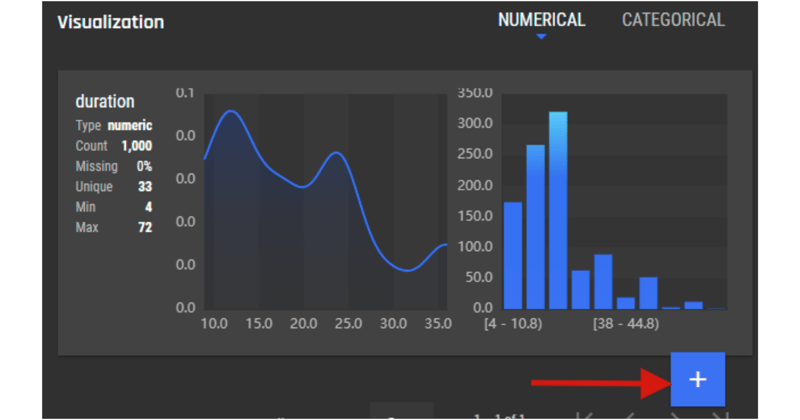

All you need to do is click on the + button right next to the suggestion, go to the recipe tab and click the commit button.

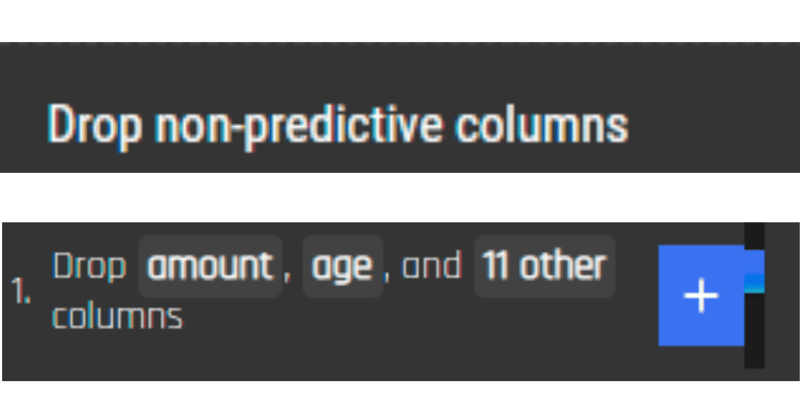

In Python, we had to write code and draw a bar plot to identify the most important features.

The AI & Analytics Engine uses AI to predict which columns do not help the model learn, and suggests that the user remove these non-predictive columns.

Click on the commit button to implement the suggestions.

Click on the Finalize & End button to complete the data wrangling process.

The platform generates easy-to-understand charts and histograms for both the numeric and the categorical columns to help you better understand the data and its spread.

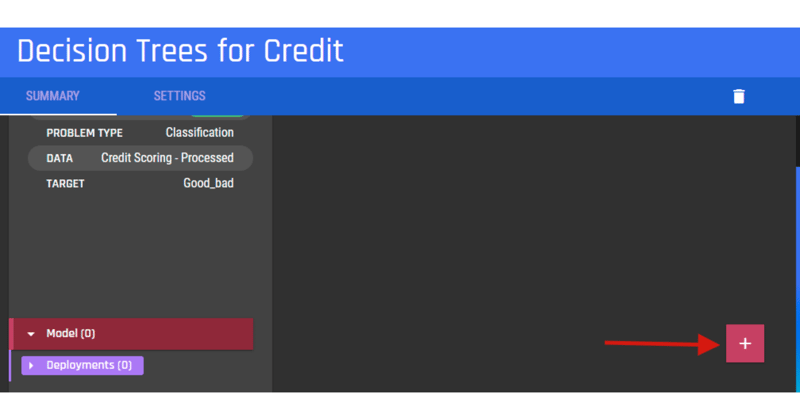

Click on the + button and click on New App.

Give your application a name and since it’s a classification problem, select Prediction and choose the target variable from the dropdown menu.

Click on the + button as shown below and select Train New Model.

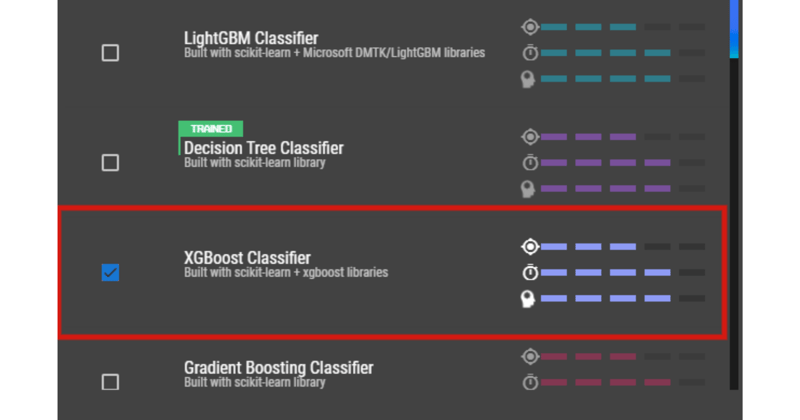

Select XG Boost Classifier from the list of algorithms. As you can see, the platform includes a range of machine learning algorithms that you can use without writing a single line of code.

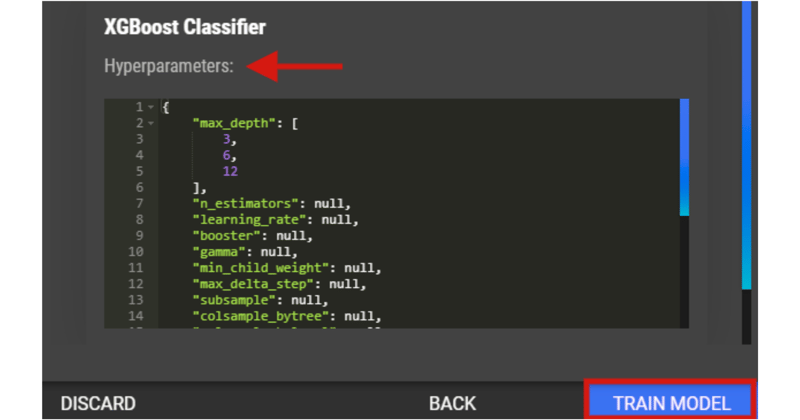

You can either select the Default configuration or the Advanced Configuration where you can tune your model by optimizing the hyperparameters.

The advanced configuration lists the hyperparameters for the XGBoost Classifier that we also optimized using grid search in Python.

Next, click on ‘Train Model’ and the platform will start training your XGBoost Classifier.

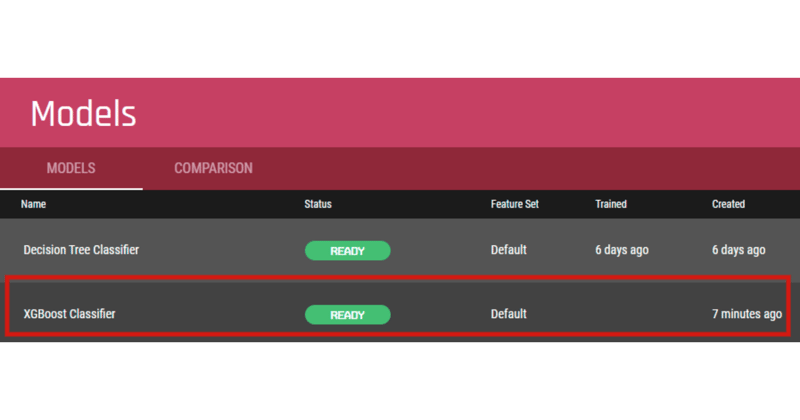

Our model has now been trained and is ready for evaluation.

It’s now time to see how well the model has learned from the data. For this, the platform has built-in capabilities to generate evaluation metrics such as the confusion matrix, classification report, ROC curve, and PR curve.

In machine learning, you usually have to build multiple models and then compare their evaluation metrics to select the model that best serves your business use case. We have implemented the decision tree classifier on the same dataset. You might be interested in comparing the XGBoost model with the decision tree model here.

Implementing XGBoost or any other machine learning algorithm on the AI & Analytics Engine is intuitive, straightforward, and seamless. We believe that making data science easier for more people to actively participate in (yes, you non-coders) is an opportunity to empower everyday business users and drive better data-led decision making.
